I thought it might be helpful to provide a modest overview of hardware configurations for using nDisplay in Unreal Engine 5.0.1, as things stand in early 2022. There are two primary categories in which these tools are currently used: virtual production (for cinema and television) and immersive experiences (from the stadium to the art gallery).

Virtual production (VP) is far more demanding of hardware, in both capability and reliability, than most immersive experiences. VP hardware absolutely needs to be genlocked, so that all video output and capture stays aligned and doesn't leave bizarre glitchy artifacts. It also requires a high level of precision in color and in blending the edges of adjacent images. When there is tracked interaction between the virtual and physical worlds, it really needs to be done with very specific and expensive tools. When done well, VP provides an amazing illusion, and given how widespread the expertise and hardware for it have become, there's very little excuse for doing it badly.

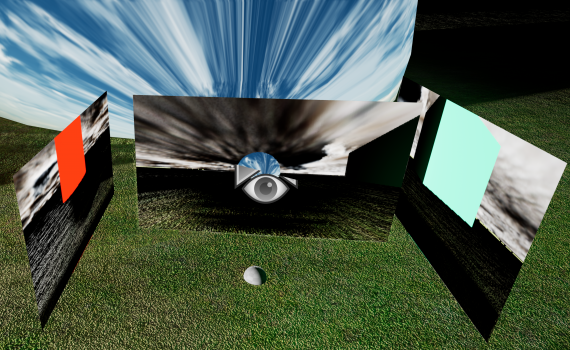

For those coming from the Unreal side of the tech world, the most useful involvement in VP is now primarily in creating environments and animations. However, as people return to social settings, there seems to be a good amount of growth on the immersive side of things, and this side offers a few more interesting opportunities for Unreal creators. That leads to the purpose of this post: a brief and simple note on several primary methods for implementing nDisplay in Unreal Engine across multiple LED panels or projectors. These descriptions are not exhaustive, and they are not the only paths, but they should be a good way to assess initial needs when developing work in this field.

Virtual production requires NVIDIA professional graphics cards. These used to be identifiable by the Quadro name, but are now branded as RTX A-series cards, at the A4000 level and above (e.g., the RTX A4000). They also require NVIDIA Quadro Sync cards to share genlock and other synchronization data between multiple machines, because nDisplay in this setup wants at most one display driven by your primary computer, with the additional screens rendered by other PCs on the same network. Assigning content to screens is likely to be handled by NVIDIA's Mosaic software, which is free but available only for professional-level graphics cards. Cameras generally need to be tracked, and that data (position, lens data, etc.) is provided by expensive proprietary hardware. The output is almost invariably to LED video panels. A variation on this workflow (also used in high-end immersive work) is to use Disguise software (D3) to control nDisplay and Unreal from within its own interface. The benefit of this approach is that D3 is very familiar to the people who run massive live events, and it connects fluidly to both other event software and event hardware.

Immersive installations at the high end (such as stadium music or sporting events) really want the same basic configuration as VP. A minor difference is that they often output to projectors instead of LED video panels.

Where immersive gets a bit more interesting is at the most modest price level, for creative works or R&D. Here, the simplest and most reliable method is still the standard VP setup, but with older hardware, such as the NVIDIA Quadro RTX 4000 (rather than the newer RTX A4000), outputting (through Mosaic) as many screens as possible via the DisplayPort/HDMI ports of as many cards as fit in a single machine.

At a lower price point, as long as great precision in image alignment and edge blending isn't required, it's possible to use Windows' built-in extended desktop (with an offset set in nDisplay to skip the “working” monitor on which you're actually doing development) to send images to as many monitors/projectors as the available DisplayPort/HDMI ports support. Most graphics cards, and probably all of those relevant here, have 4 ports. With this approach, it's possible to avoid the outlay for professional GPUs and Quadro Sync cards. It requires only one or more consumer NVIDIA RTX cards, and more cards allow more outputs. It's worth remembering that the number of graphics cards you can install is limited mainly by the PCIe slots on the motherboard, and most consumer motherboards have only 2-3 such slots.
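The offset arithmetic for the extended-desktop approach can be sketched in Python. This is a hypothetical helper, not part of nDisplay or Windows: it assumes every output has the same resolution, that the outputs sit in a single row to the right of the development monitor in Windows display settings, and that one GPU port drives that development monitor. It returns the single window spanning all outputs (in desktop coordinates, so its x position is the offset past the working monitor) and each output's region relative to that window, which are the kinds of numbers you would then enter by hand into your nDisplay configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """A pixel rectangle: top-left corner plus size."""
    x: int
    y: int
    width: int
    height: int


def extended_desktop_layout(num_cards, ports_per_card, pcie_slots,
                            primary_width, out_width, out_height):
    """Hypothetical helper: lay out identical outputs in one row to the
    right of the development monitor on a Windows extended desktop.

    Returns (window, viewports): the window spanning every output, in
    desktop coordinates, and each output's region relative to that window.
    """
    if num_cards > pcie_slots:
        raise ValueError("more graphics cards than free PCIe slots")
    # Assumption: one port drives the development ("working") monitor.
    num_outputs = num_cards * ports_per_card - 1
    # The window starts just past the development monitor: this is the offset.
    window = Rect(primary_width, 0, num_outputs * out_width, out_height)
    viewports = [Rect(i * out_width, 0, out_width, out_height)
                 for i in range(num_outputs)]
    return window, viewports


# Two consumer cards with 4 ports each (3 PCIe slots on the board),
# a 2560-px-wide development monitor, and 1920x1080 projectors.
window, viewports = extended_desktop_layout(2, 4, 3, 2560, 1920, 1080)
print(window)
print(len(viewports))
```

With two 4-port cards this yields seven usable outputs in one 13440x1080 window starting at x = 2560. If Windows arranges the displays differently (for example, with the development monitor on the right), the offsets change accordingly.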

A hack that fits the economy level of nDisplay implementation, and increases the number of screens beyond the number of outputs on a video card, is to send several very high resolution (4K and above) video feeds to a video wall controller, which can then split each feed among several monitors/projectors (as long as the viewports are flat and all aligned within the design).
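In the simplest case, the split a video wall controller performs is just a grid of equal crops of each incoming feed. The Python sketch below is a hypothetical illustration of that arithmetic, not any controller's actual configuration interface; it assumes a flat wall of identical displays with no bezel compensation.

```python
def split_feed(feed_width, feed_height, cols, rows):
    """Hypothetical helper: divide one flat video feed into a cols x rows grid
    of equal tiles, listed left-to-right, top-to-bottom, as (x, y, w, h)."""
    if feed_width % cols or feed_height % rows:
        raise ValueError("feed does not divide evenly into the requested grid")
    tile_w, tile_h = feed_width // cols, feed_height // rows
    return [(c * tile_w, r * tile_h, tile_w, tile_h)
            for r in range(rows) for c in range(cols)]


# One 3840x2160 GPU output split 2x2 onto four 1920x1080 displays.
tiles = split_feed(3840, 2160, 2, 2)
for tile in tiles:
    print(tile)

# Four such outputs from one card, each split 2x2, drive 16 screens.
screens = 4 * len(tiles)
print(screens)
```

The trade-off is that every tile from one feed shares that feed's single render, which is why this only works when the viewports are flat and aligned.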

For tracking camera location, Vive trackers still seem to be the most viable and well-supported low-cost solution, although new options appear to be bubbling up. I hope more become available soon. Vive trackers are really pretty unreliable and require a fair amount of ongoing tweaking. It's hard to imagine using them unless the developer has plenty of setup time in a controlled environment, with no client watching or waiting through the gory setup process.

For setups that require human tracking, there are loads of great solutions, but none of them is cheap, and none is portable enough to be an obvious choice for most needs. This is definitely changing, as there's now a widely held belief that comprehensive real-time 3D data capture is core to the needs of a wide variety of industries.

Another thing to keep in mind when setting up VP or immersive installations of almost any scale is that these video feeds are much better served by going straight to a full 12G-SDI system, rather than trying to get by with HDMI or DisplayPort (which allows longer runs than HDMI, but is expensive and not as long as SDI), HDBaseT (which is consumer-grade and not well supported), or even 3G- or 6G-SDI (as these can't carry 4K at 60 fps over a single link, so they'll be obsolete in the very near future).
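A rough bandwidth check shows why 12G-SDI is the comfortable choice for 4K. The Python sketch below compares the active-picture bit rate of a few formats against nominal single-link SDI rates (2.97, 5.94 and 11.88 Gbit/s). It assumes 10-bit 4:2:2 video (20 bits per pixel on average) and counts only active pixels, ignoring the blanking and ancillary data that real SDI also carries, so treat the figures as a lower bound rather than an exact link budget. The function names are illustrative.

```python
# Nominal single-link SDI line rates in Gbit/s.
SDI_LINK_GBPS = {"3G": 2.97, "6G": 5.94, "12G": 11.88}


def active_payload_gbps(width, height, fps, bits_per_pixel=20):
    """Active-picture bit rate; 20 bits/pixel corresponds to 10-bit 4:2:2."""
    return width * height * fps * bits_per_pixel / 1e9


def smallest_single_link(width, height, fps, bits_per_pixel=20):
    """Return the slowest SDI link whose rate covers the active payload, or None."""
    needed = active_payload_gbps(width, height, fps, bits_per_pixel)
    for name, rate in sorted(SDI_LINK_GBPS.items(), key=lambda kv: kv[1]):
        if needed <= rate:
            return name
    return None


for w, h, fps in [(1920, 1080, 60), (3840, 2160, 30), (3840, 2160, 60)]:
    print(f"{w}x{h}p{fps}: {active_payload_gbps(w, h, fps):.2f} Gbit/s -> "
          f"{smallest_single_link(w, h, fps)}")
```

For these three formats the sketch picks 3G, 6G and 12G respectively: 4K at 30 fps squeezes into a single 6G link, but 4K at 60 fps (about 9.95 Gbit/s of active picture alone) needs 12G.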